Developer Conference 2019, also known as DevCon 2019, took place in Mauritius from 11 to 13 April 2019. Once again, Jochen Kirstätter and his team at the Mauritius Software Craftsmanship Community worked fantastically to make this event a reference throughout the region. All geeks of Mauritius and a few from neighbouring countries made a point of attending, which meant that speakers were under quite a lot of pressure this year to deliver!

Since the very beginning, I knew that I was going to speak about Artificial Intelligence. As I told the audience, my (selfish) reason was for me to know more: the best way to learn is to teach. Of course, I also wanted other people to know more. Hence my focus on starting from the fundamentals and demystifying everything. The full code is on GitHub.

For logistical reasons, I chose to do two presentations.

Presentation #1: How Deep Learning Works

This is what I submitted to Jochen and his colleagues:

Everyone is talking of Artificial Intelligence today as the next Big Thing! This session explains, from the point of view of a programmer, what a Neuron is, how a Neural Network can be built and how to use frameworks such as TensorFlow and TFLearn to quickly experiment with Deep Learning.

I was fortunate to have a good photographer, Sumeet Mudhoo, present at the beginning of my talk and he kindly gave me permission to use a few of his photos to illustrate this post.

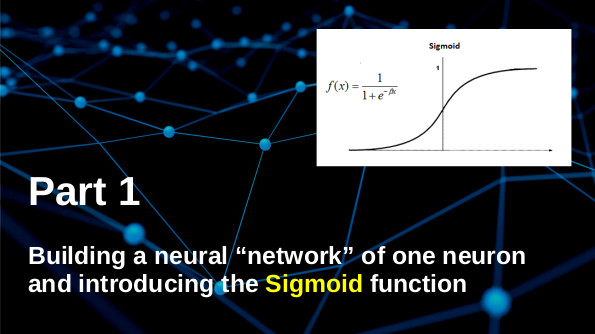

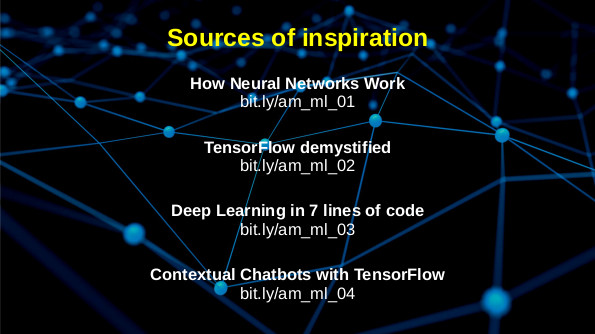

I started with how a simple artificial neuron capable of learning works. As I always do, I like to stand on the shoulders of giants and, therefore, I based this part of my presentation on an article found online: How Neural Networks Work. The programming part is fascinating. One neuron is just a simple function which takes some inputs and a corresponding number of weights and produces one output, generally by computing a dot product (the sum of each input multiplied by its weight). On its own, that sum can be any number, large or small, positive or negative. Passing it through a Sigmoid function, such as the one pictured above, makes sure the neuron can only produce an answer between 0 and 1 (which is perfect for digital computers). Another benefit is that, because of the S-shape of the Sigmoid function, extremes are flattened: a very large sum produces practically the same output as a merely large one.
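To make this concrete, here is a minimal sketch of such a neuron in plain Python. It is not the code from the article: the function names and the weights are assumptions chosen purely for illustration.

```python
import math

def sigmoid(x):
    """Squash any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights):
    """Weighted sum (dot product) of the inputs, passed through the sigmoid."""
    weighted_sum = sum(i * w for i, w in zip(inputs, weights))
    return sigmoid(weighted_sum)

# Hypothetical weights, chosen only for illustration.
weights = [2.0, -1.0, 0.5]

print(neuron([1, 0, 1], weights))    # weighted sum is 2.5
print(neuron([10, 0, 0], weights))   # weighted sum is 20: output saturates just below 1
print(neuron([0, 30, 0], weights))   # weighted sum is -30: output saturates just above 0
```

Whatever the inputs, the output stays strictly between 0 and 1, and the two extreme cases end up very close to 1 and 0 respectively.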

Another fascinating aspect of the code is the learning process. At the beginning, the weights of the neuron are far from what they should be and, consequently, the result produced is not very good compared to the expected result. The difference between the two (the error) is calculated and then used to refine the weights. One beautiful aspect of this learning process is that the error is multiplied by the derivative of the Sigmoid, which is tiny when the output is already close to 0 or 1. Once again, extremes are ignored: a neuron that is already confident is barely adjusted, while an undecided one is adjusted the most.
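The learning loop can be sketched as follows. This is a simplified illustration written for this post, not the article's code: the toy training data (where the expected answer is simply the first input), the starting weights of zero and the number of iterations are all assumptions.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_derivative(output):
    """Slope of the sigmoid, expressed in terms of its own output."""
    return output * (1.0 - output)

def predict(inputs, weights):
    return sigmoid(sum(i * w for i, w in zip(inputs, weights)))

def train(samples, targets, weights, iterations):
    for _ in range(iterations):
        adjustments = [0.0] * len(weights)
        for inputs, target in zip(samples, targets):
            output = predict(inputs, weights)
            error = target - output                     # how far off we are
            delta = error * sigmoid_derivative(output)  # small when output is near 0 or 1
            for k, value in enumerate(inputs):
                adjustments[k] += value * delta         # only active inputs are adjusted
        weights = [w + a for w, a in zip(weights, adjustments)]
    return weights

# Toy data (an assumption for this sketch): the target equals the first input.
samples = [[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]]
targets = [0, 1, 1, 0]

weights = train(samples, targets, [0.0, 0.0, 0.0], iterations=10000)
print(weights)
print(predict([1, 0, 0], weights))  # an input never seen in training; should be close to 1
```

After training, the first weight dominates, which is the neuron's way of having "discovered" that only the first input matters.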

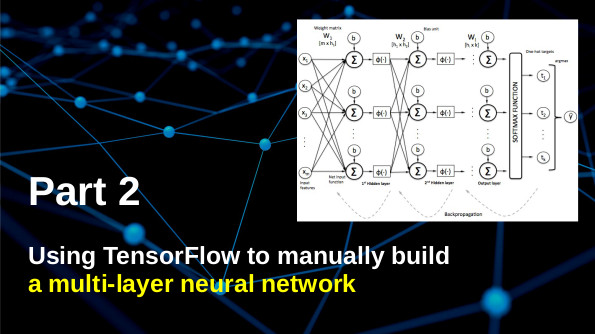

I then moved on to showing the audience how the TensorFlow library, created by Google, works and can be used to create a neural network: a network of many neurons arranged in layers (one input layer, one output layer and one or two hidden layers in between, hence “deep” learning).
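Before bringing in any library, the idea of layers can be sketched in plain Python. The layer sizes, the helper names `dense` and `random_layer` and the input vector below are hypothetical and exist only for this illustration; the weights are random, so the network has not learned anything yet.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: (inputs * W) + b, then an optional activation."""
    outputs = []
    for j in range(len(biases)):
        total = biases[j] + sum(inputs[i] * weights[i][j] for i in range(len(inputs)))
        outputs.append(activation(total) if activation else total)
    return outputs

def random_layer(n_in, n_out, rng):
    """Random weights (n_in x n_out) and biases (n_out) as a starting point."""
    weights = [[rng.gauss(0, 1) for _ in range(n_out)] for _ in range(n_in)]
    biases = [rng.gauss(0, 1) for _ in range(n_out)]
    return weights, biases

rng = random.Random(42)            # fixed seed so that runs are repeatable
w1, b1 = random_layer(5, 4, rng)   # 5 inputs -> 4 hidden neurons
w2, b2 = random_layer(4, 4, rng)   # 4 hidden -> 4 hidden neurons
w3, b3 = random_layer(4, 2, rng)   # 4 hidden -> 2 outputs

sample = [1, 0, 0, 1, 1]           # hypothetical input vector
h1 = dense(sample, w1, b1, sigmoid)
h2 = dense(h1, w2, b2, sigmoid)
output = dense(h2, w3, b3)         # raw scores, no activation on the last layer
print(output)
```

Each layer simply feeds its outputs to the next one, which is all that "arranged in layers" means.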

Once again, I relied on an online post, this time Tensorflow demystified. TensorFlow is quite complex to use as a programmer needs to be very explicit in the way the neural network is expressed.

This is, in essence, how a neural network is built in TensorFlow:

    import tensorflow as tf  # this snippet uses the TensorFlow 1.x API

    # train_x (the training inputs) and data (the input tensor) are assumed
    # to be defined earlier in the full code.

    # hidden layers and their nodes
    n_nodes_hl1 = 32
    n_nodes_hl2 = 32

    # classes in our output
    n_classes = 2

    # Initialize the weights and biases of each layer with random values.
    # We also define our output layer.
    hidden_1_layer = {'f_fum': n_nodes_hl1,
                      'weight': tf.Variable(tf.random_normal([len(train_x[0]), n_nodes_hl1])),
                      'bias': tf.Variable(tf.random_normal([n_nodes_hl1]))}

    hidden_2_layer = {'f_fum': n_nodes_hl2,
                      'weight': tf.Variable(tf.random_normal([n_nodes_hl1, n_nodes_hl2])),
                      'bias': tf.Variable(tf.random_normal([n_nodes_hl2]))}

    output_layer = {'f_fum': None,
                    'weight': tf.Variable(tf.random_normal([n_nodes_hl2, n_classes])),
                    'bias': tf.Variable(tf.random_normal([n_classes]))}

    # Let's define the neural network:

    # hidden layer 1: (data * W) + b
    l1 = tf.add(tf.matmul(data, hidden_1_layer['weight']), hidden_1_layer['bias'])
    l1 = tf.sigmoid(l1)

    # hidden layer 2: (hidden_layer_1 * W) + b
    l2 = tf.add(tf.matmul(l1, hidden_2_layer['weight']), hidden_2_layer['bias'])
    l2 = tf.sigmoid(l2)

    # output: (hidden_layer_2 * W) + b
    output = tf.matmul(l2, output_layer['weight']) + output_layer['bias']

Phew! That is a lot of code, and so error-prone that someone was bound to create a higher-level library which is easier to use.

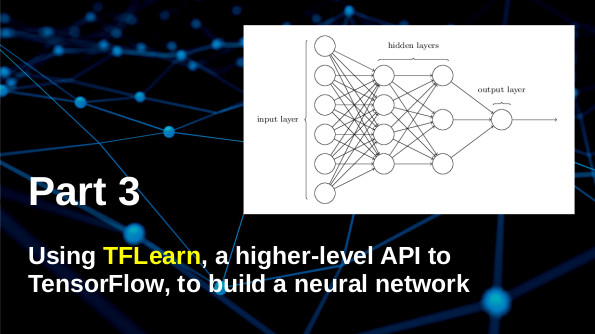

This is why I quickly transitioned to TFLearn, a higher-level API to TensorFlow, based yet again on another article: Deep Learning in 7 lines of code. Using TFLearn, the same neural network can easily be built like this:

    import tflearn

    net = tflearn.input_data(shape=[None, 5])
    net = tflearn.fully_connected(net, 32)
    net = tflearn.fully_connected(net, 32)
    net = tflearn.fully_connected(net, 2, activation='softmax')
    net = tflearn.regression(net)

    # DNN means Deep Neural Network
    model = tflearn.DNN(net, tensorboard_dir='tflearn_logs')

The softmax function is a function that takes as input a vector of K real numbers, and normalizes it into a probability distribution consisting of K probabilities. That is, prior to applying softmax, some vector components could be negative, or greater than one; and might not sum to 1; but after applying softmax, each component will be in the interval (0,1), and the components will add up to 1.
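As a small illustration of that definition, softmax can be written in a few lines of plain Python. The scores used here are made up, and subtracting the largest value before exponentiating is a common trick to avoid overflow that does not change the result.

```python
import math

def softmax(values):
    """Turn a list of real numbers into probabilities that add up to 1."""
    largest = max(values)                         # subtracted for numerical stability
    exps = [math.exp(v - largest) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

scores = [2.0, -1.0, 0.5]          # hypothetical raw outputs of a network
probabilities = softmax(scores)
print(probabilities)
print(sum(probabilities))          # adds up to 1, within floating-point rounding
```

The largest score gets the largest probability, so the ordering of the outputs is preserved.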

As for the regression layer, TFLearn uses it to attach a loss function and an optimizer to the network so that it can be trained. The underlying idea is easiest to see with Linear Regression, a Supervised Learning algorithm whose goal is to predict continuous, numerical values based on given input data. From a geometrical perspective, each data sample is a point. Linear Regression tries to find the parameters of a linear function so that the distance between all the points and the line is as small as possible. The algorithm used to update the parameters is called Gradient Descent.
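Gradient Descent itself can be sketched on a toy problem: fitting a straight line through a handful of points. The data, the learning rate and the number of steps below are assumptions picked for this example, and the function `fit_line` is hypothetical rather than part of TFLearn.

```python
def fit_line(xs, ys, learning_rate=0.05, steps=2000):
    """Fit y = slope * x + intercept by gradient descent on the mean squared error."""
    slope, intercept = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Signed distance between the current line and each point.
        errors = [(slope * x + intercept) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to each parameter.
        grad_slope = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        grad_intercept = (2.0 / n) * sum(errors)
        # Take a small step downhill.
        slope -= learning_rate * grad_slope
        intercept -= learning_rate * grad_intercept
    return slope, intercept

xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]               # points lying exactly on y = 2x + 1
slope, intercept = fit_line(xs, ys)
print(slope, intercept)            # should end up very close to 2 and 1
```

Training a neural network follows the same principle, except that there are many more parameters than a slope and an intercept.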

On arriving at this point, everyone present (including myself) understood what a neuron is, what a neural network is and how to build one capable of learning using TFLearn. With this new knowledge, we were all ready to build a chatbot.

Presentation #2: Building a Chatbot using Deep Learning

This is what I submitted to Jochen and his colleagues:

Smart devices of today, powered by e.g. Google Assistant, Apple Siri or Amazon Alexa, can chat with us in quite surprising ways. It is therefore interesting for a developer to understand how chatbots work. In this session, we will build a chatbot using Deep Learning techniques.

Building a chatbot is quite straightforward using TFLearn, especially with high quality articles such as Contextual Chatbots with Tensorflow. The idea is to have a set of sentences and, for each, a set of possible responses. Now, in the past, this would have been done using a set of rigid if-then-else statements. Today, we tend to use two novel programming techniques.

Firstly, the sentences are not actually sentences. Rather, they are patterns which are matched against what the user is asking, using the Natural Language Toolkit (NLTK). Words are stemmed, i.e. reduced to their root form, so that the chatbot becomes easier to interact with (in the sense that the user is not forced to use specific words or tenses).

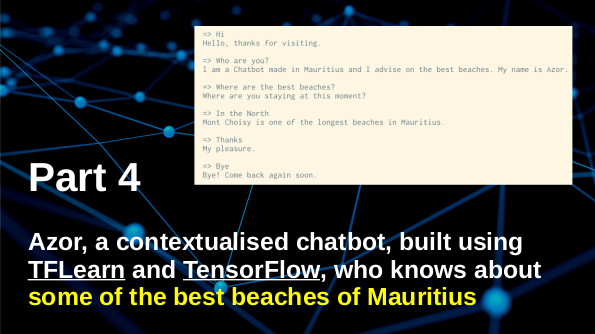

The second interesting part is that the chatbot is contextualised. In my example of a chatbot knowing about the beaches in Mauritius, here is an example interaction:

    ==> Where are the best beaches?
    Where are you staying at this moment?
    ==> In the North
    Mont Choisy is one of the longest beaches in Mauritius.

The second response is conditional on the first question (“Where are the best beaches?”) having been asked. This is done using training data such as the following (found on GitHub in full):

    {
        "tag": "beach",
        "patterns": [
            "Beach",
            "Seaside",
            "Place to swim"
        ],
        "responses": [
            "Where are you right now?",
            "In which part of Mauritius do you plan to go?",
            "Where are you staying at this moment?"
        ],
        "context_set": "beach"
    },
    {
        "tag": "beach_north",
        "patterns": [
            "North",
            "Northern",
            "Grand Baie"
        ],
        "responses": [
            "Trou aux Biches is shallow and calm, with gently shelving sands, making it ideal for families.",
            "The water is deep at Pereybere but still very calm.",
            "Mont Choisy is one of the longest beaches in Mauritius.",
            "La Cuvette is a tucked-away jewel and one of the shortest beaches in Mauritius."
        ],
        "context_filter": "beach"
    }

The context_set happens as soon as the user asks for a beach, the seaside or a place to swim (or variations thereof). The responses about the four beaches in the north are then conditional on (1) the user saying that they are in the north, in the northern part of Mauritius or at Grand Baie and (2) the beach context having been set previously.
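The context mechanism can be sketched as follows. This is a simplified illustration rather than the code from the article: the `respond` function is hypothetical, the intents are trimmed down to one response each, and the tag is passed in directly whereas in the real chatbot it is predicted by the neural network.

```python
import random

# Trimmed-down version of the training data, kept only for this sketch.
intents = [
    {"tag": "beach",
     "responses": ["Where are you staying at this moment?"],
     "context_set": "beach"},
    {"tag": "beach_north",
     "responses": ["Mont Choisy is one of the longest beaches in Mauritius."],
     "context_filter": "beach"},
]

def respond(tag, context):
    """Return (response, new_context) for a tag, or (None, context) if filtered out."""
    for intent in intents:
        if intent["tag"] != tag:
            continue
        required = intent.get("context_filter")
        if required is not None and required != context:
            return None, context             # the required context was never set
        new_context = intent.get("context_set", context)
        return random.choice(intent["responses"]), new_context
    return None, context                     # unknown tag

context = None
print(respond("beach_north", context))       # no beach context yet, so no response
reply, context = respond("beach", context)   # sets the context to "beach"
print(reply)
reply, context = respond("beach_north", context)
print(reply)                                 # now allowed, because the context matches
```

Saying "North" out of the blue therefore produces nothing, while saying it after asking about beaches produces a beach recommendation.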

The rest is just about creating a vector of 0’s and 1’s based on the input of the user, where each 0 or 1 indicates whether or not one of the stem words is present in the input, out of all possible stem words from all the patterns defined. This vector is then fed to the trained network to predict the most likely tag, and a response is selected randomly among those defined for that tag. The complete code can be found in the article indicated previously.
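Building that vector can be sketched in plain Python. To keep the example self-contained, the `stem` function below is a deliberately crude stand-in for a real NLTK stemmer (it only lowercases and strips a few suffixes), and `tokenize` and `bag_of_words` are hypothetical helper names.

```python
def stem(word):
    """Crude stand-in for a real stemmer: lowercase and strip a few suffixes."""
    word = word.lower()
    for suffix in ("ing", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def tokenize(sentence):
    """Split a sentence into words, treating punctuation as whitespace."""
    cleaned = "".join(ch if ch.isalnum() else " " for ch in sentence)
    return cleaned.split()

# All stem words from all patterns form the vocabulary.
patterns = ["Beach", "Seaside", "Place to swim", "North", "Northern", "Grand Baie"]
vocabulary = sorted({stem(w) for p in patterns for w in tokenize(p)})

def bag_of_words(sentence):
    """One 0 or 1 per vocabulary word: is that stem present in the sentence?"""
    stems = {stem(w) for w in tokenize(sentence)}
    return [1 if word in stems else 0 for word in vocabulary]

print(vocabulary)
# ['baie', 'beach', 'grand', 'north', 'northern', 'place', 'seaside', 'swim', 'to']
print(bag_of_words("Where are the best beaches?"))
# [0, 1, 0, 0, 0, 0, 0, 0, 0]
```

Thanks to stemming, "beaches" lights up the same position as "Beach", which is why the user is not forced to use the exact words found in the patterns.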

At the end, I was happy that my two objectives had been attained: I knew more and the audience knew more. Perfect.
